Introduction

We have been using the MooseFS distributed filesystem in production for more than 3 years. A frequent question is: what is your experience of managing a petascale distributed filesystem with MooseFS? This article aims to answer that question (objectively, if possible).

Why MooseFS ?

The short answer: in 2010, based on our use cases, requirements and experiments, it was the only distributed filesystem suitable for our needs. A more detailed answer is available in the article "Distributed File System on Cersat Platform".

Quick summary (for the impatient)

Here is a short list of the pros and cons of using MooseFS at petascale over the years. This list is based on our experience with MooseFS up to version 1.6.27 (some issues may have been fixed since).

Pros :

- ease of use : very easy to install, manage, monitor

- data safety and availability : per-file replication lets you simply unplug a problematic node with no impact on users (as long as the replication goal is > 1).

- scalability : horizontal scaling is simple and efficient (adding more disks also increases overall performance for distributed processing)

- reliability : no real issue with the reliability of MooseFS itself. Its clear and clean design also makes it easier to restart the system when something goes wrong

- NFS-like access : works well, but requires installing a specific driver on each client (it usually compiles without issue on both old and recent distributions)

- advanced features such as the global trash and snapshots sometimes help

- professional support is now available (and very helpful in critical cases).
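The replication goal mentioned in the list above is set per file or per directory with the `mfssetgoal` client tool. As a minimal sketch, the helper below only builds the command line and executes nothing; the function name and the `/mnt/mfs/archive` path are hypothetical and not taken from our setup.

```python
def build_setgoal_command(path, goal, recursive=True):
    """Return the argv list for an mfssetgoal call (nothing is executed)."""
    if goal < 1:
        raise ValueError("replication goal must be at least 1")
    argv = ["mfssetgoal"]
    if recursive:
        argv.append("-r")  # apply the goal to the whole directory tree
    argv += [str(goal), path]
    return argv


if __name__ == "__main__":
    # Hypothetical mount point; a goal of 2 asks for two copies of each chunk.
    print(" ".join(build_setgoal_command("/mnt/mfs/archive", 2)))
```

The returned list can be handed to `subprocess.run` on a client where the filesystem is mounted.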

Cons :

- data redundancy relies on replication only. This has some advantages over RAID5-like solutions, but costs a lot more than they do at petascale...

- one heavily loaded node can slow down the whole platform... (this seems to be improved in version 1.6.27). Note: each node also runs users' distributed processing jobs, not only the mfschunkserver process.

- POSIX standard only partially implemented : this can occasionally be an issue, with locks for example

- professional support pricing, based on a € per disk rate, is not affordable for us. But the commercial team may hopefully show some flexibility in some cases with research institutes like us.
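To make the cost of replication compared with RAID5-like solutions concrete, here is a small worked calculation of the raw capacity needed for a given usable capacity. It is only a sketch: the 5+1 parity layout and the 1 PB figure are illustrative assumptions, not a description of our hardware.

```python
from fractions import Fraction


def raw_with_replication(usable_tb, goal):
    """Raw capacity needed when every chunk is stored `goal` times."""
    return Fraction(usable_tb) * goal


def raw_with_parity(usable_tb, data_disks, parity_disks=1):
    """Raw capacity needed for a parity layout (RAID5-like when parity_disks=1)."""
    return Fraction(usable_tb) * Fraction(data_disks + parity_disks, data_disks)


if __name__ == "__main__":
    usable = 1000  # 1 PB expressed in TB (illustrative figure)
    print(f"replication, goal 2 : {float(raw_with_replication(usable, 2)):.0f} TB raw")
    print(f"parity, 5+1 disks   : {float(raw_with_parity(usable, 5)):.0f} TB raw")
```

Under these assumptions, a goal of 2 doubles the raw capacity to buy, while a 5+1 parity group adds only 20%.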

Notes/Tips :

- we do not focus on performance; our requirements were different. Although it does work well and with high performance thanks to file chunks, replication and horizontal scalability, other filesystems are certainly far more efficient. To be honest, I still wonder why we are so far from the theoretical performance estimates... but we have been able to live with it so far.

- mfsmaster : keep enough free RAM on your MooseFS master (to avoid side effects such as swapping when mfsmaster forks to dump its index).

- mfsmaster : since it is not multi-threaded, a single-core CPU with a high clock frequency is better than a quad-core with a lower frequency.

Usage

Storing hundreds of terabytes of data is sometimes a challenge. Using them efficiently is another one. We currently use this distributed filesystem to meet both requirements.

On our platform, each node runs a MooseFS data store (called mfschunkserver), but also runs processing ( each node

Note: For our use case, which is basically storing and using huge numbers of megabyte to gigabyte files, MooseFS behaves well. But, in my opinion, MooseFS should not be considered a "general purpose filesystem". For example, it is not well suited to huge numbers of very small files such as mails, small processing logs, metadata or other kilobyte-scale files: such a use case requires too much RAM for the MooseFS master index. Also, POSIX is not completely implemented, which can be an issue when working with system locks or advanced usages.
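The effect of file size on the master index can be illustrated with a rough estimate. This is a sketch under a loud assumption: the figure of about 300 bytes of master RAM per file is a commonly quoted rule of thumb, not a value measured on our platform, and the function name is hypothetical.

```python
# Assumption: rule-of-thumb RAM cost per file in the master index.
ASSUMED_BYTES_PER_FILE = 300


def estimate_master_ram_bytes(total_data_bytes, avg_file_bytes,
                              bytes_per_file=ASSUMED_BYTES_PER_FILE):
    """Estimate master index RAM from the total volume and the average file size."""
    file_count = -(-total_data_bytes // avg_file_bytes)  # ceiling division
    return file_count * bytes_per_file


if __name__ == "__main__":
    one_pb = 10**15
    for label, avg_size in (("100 MB files", 10**8), ("10 KB files", 10**4)):
        ram_gb = estimate_master_ram_bytes(one_pb, avg_size) / 10**9
        print(f"1 PB of {label}: about {ram_gb:.0f} GB of master RAM")
```

With this assumption, 1 PB of 100 MB files needs about 3 GB of index memory, while the same volume in 10 KB files would need about 30 TB, which no single master can hold.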

Current state

TODO : add a screenshot of the monitoring console? State the number and type of servers, etc.

Installation

Definitely a strong point: in just a few minutes you can have a usable distributed filesystem running with hundreds of terabytes, a monitoring console, and a few clients... and you won't have to change anything until you reach petascale issues!

Adding more storage capacity is also really easy: just install and start the MooseFS mfschunkserver software (after configuring the disks available for MooseFS use). That's all: your new chunkserver is now available and registered in the web monitoring console, and data balancing will soon start moving data onto the new node, freeing space on the other nodes.
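What balancing means for the existing nodes can be sketched with simple arithmetic. The model below assumes that balancing aims at the same fill ratio on every node; this is a simplification for illustration, not a description of the actual MooseFS algorithm, and the node sizes are made up.

```python
from fractions import Fraction


def rebalance(nodes, new_capacity_tb):
    """Model the effect of adding one empty node.

    nodes: list of (used_tb, capacity_tb) for the existing nodes.
    Returns (target_ratio, moved, received) where `moved` lists the data
    leaving each existing node (negative means the node receives data)
    and `received` is the data landing on the new node.
    """
    total_used = sum(Fraction(used) for used, _ in nodes)
    total_capacity = sum(Fraction(cap) for _, cap in nodes) + new_capacity_tb
    target_ratio = total_used / total_capacity
    moved = [Fraction(used) - target_ratio * cap for used, cap in nodes]
    received = target_ratio * new_capacity_tb
    return target_ratio, moved, received


if __name__ == "__main__":
    # Four hypothetical 100 TB nodes, each 80% full, plus one new empty 100 TB node.
    ratio, moved, received = rebalance([(80, 100)] * 4, 100)
    print(f"target fill ratio: {float(ratio):.0%}")
    print(f"moved from each node: {[float(m) for m in moved]} TB")
    print(f"received by new node: {float(received)} TB")
```

In this made-up case every node ends up 64% full: each existing node gives up 16 TB and the new node receives 64 TB.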

Just take care to size your MooseFS master node correctly, especially for CPU and memory.

As an example, we currently use a Dell R620 for this, with an Intel(R) Xeon(R) CPU E5-2643 0 @ 3.30GHz, 64GB of memory, and RAID1 mirroring to store the critical MooseFS metadata (/var/lib/mfs).

Horizontal scalability

We scaled our platform from 10TB to 3PB without major issues and without human intervention to manage data balancing (except when removing storage nodes).

MooseFS version upgrades

Hmm... we don't like them very much: upgrading a piece of software that manages all your data archives (>1PB of data) makes us anxious. The MooseFS upgrade procedure is simple and should work well in most cases. But we were unlucky twice (somewhat our own fault), so we now check, re-check, and re-re-check before doing anything... and we also avoid upgrading until a new version has been used for a long time by other users ;-)

Maintenance

It does not require much maintenance in normal operation. We had serious trouble with earlier versions, when one heavily loaded node could degrade the whole platform's performance by a factor of 10 or more. Recent experience is quite good in this respect. The remaining maintenance concerns files becoming corrupt for no apparent reason (probably related to load balancing / replication), but this has affected only about 1500 files over 4 years of use (the system currently hosts 120 million files, so roughly 0.001% of them).

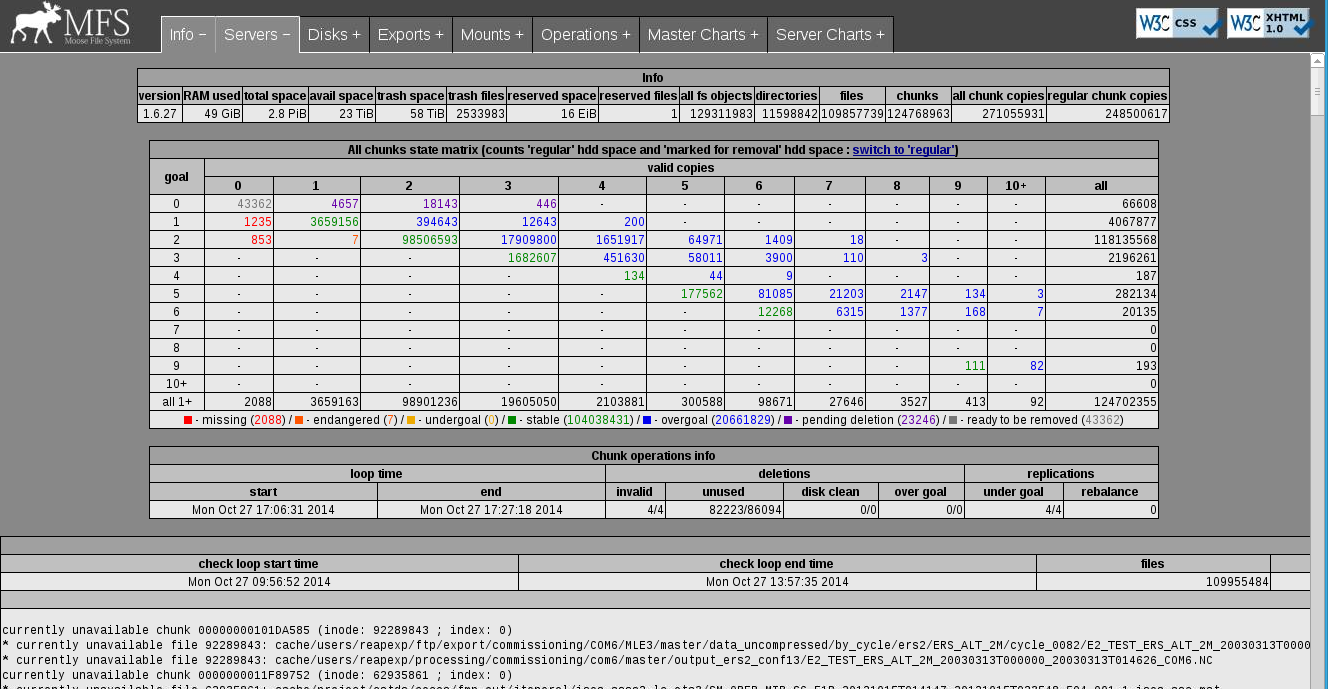

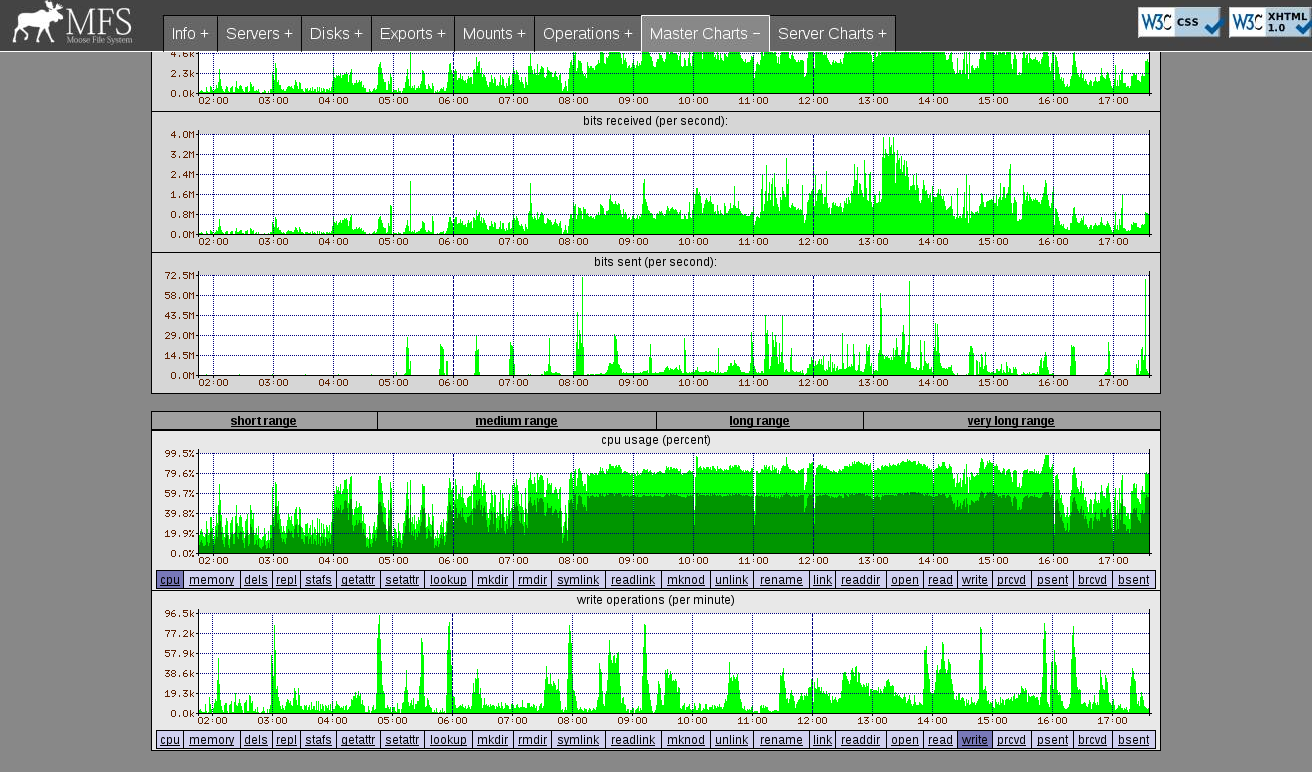

Monitoring

MooseFS comes out of the box with a useful web monitoring interface, which details cluster state and provides charts for master and chunkservers.

Our remaining issues

- Our MooseFS filesystem now stores most of our data. We would like some of this data to be accessible through FTP or HTTP, but Ifremer security rules do not allow an intranet filesystem to be accessible from public Internet FTP/web servers, unless it is mounted read-only over NFS from DMZ servers. This is not possible with MooseFS, and is really an issue for us. We tried a gateway re-exporting the MooseFS filesystem over NFS to the DMZ servers, but it is clearly not stable and performs really poorly.

Specific issues we had

(to do: go over the 2 or 3 big crashes we had with Oliv)
